Jim Bednar and Martin Durant

It is no secret that every scientific, engineering, or analytical discipline has its own specialized computing needs. In fact, many of these disciplines have developed entirely separate sets of tools for working with data, covering data storage, reading, processing, plotting, analysis, modeling, and exploration.

Unfortunately, most of these existing stacks are based on outdated architectures and assumptions.

It is time for a better way, and the Python community has created it!

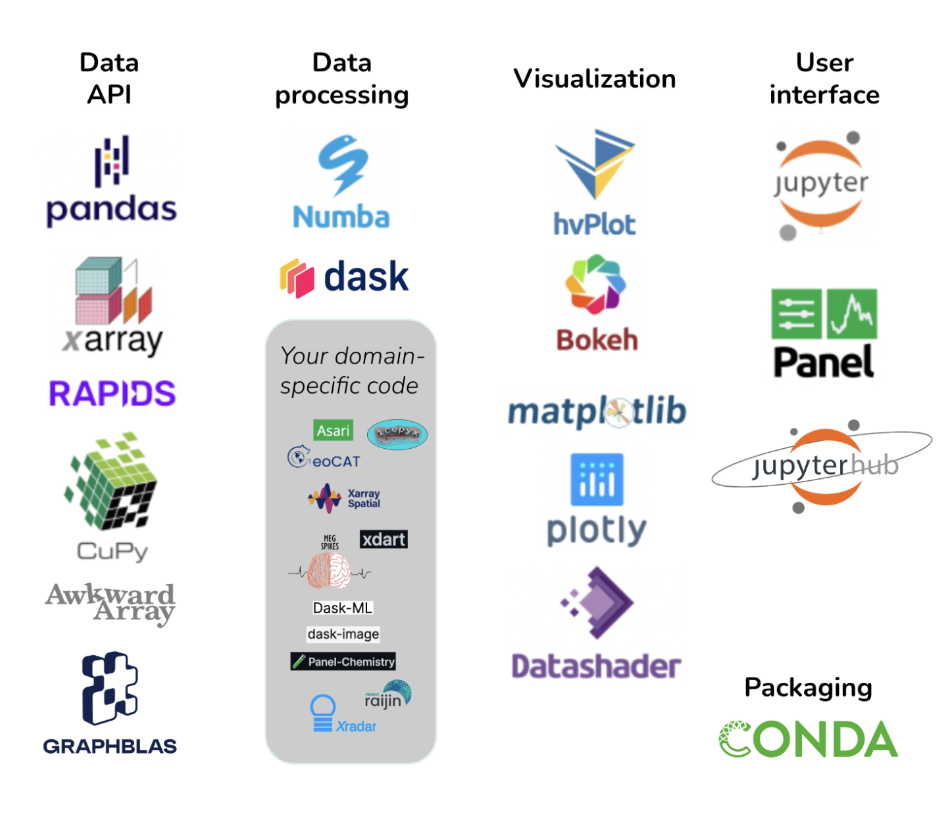

Pandata is a collection of open-source Python libraries that can be used individually and are maintained separately by different people, but that work well together to solve big problems. You can use the Pandata website to identify which of the thousands of available Python libraries meet Pandata standards for scalability, interactivity, cloud support, and so on. Pandata includes Parquet and Zarr data formats, the Pandas, Xarray, and Rapids data APIs, the Numba and Dask high-performance computing tools, the hvPlot, Bokeh, Matplotlib, and Datashader visualization tools, the Jupyter and Panel user interface tools, and many more!

Pandata is a scalable open-source analysis stack that can be used in any domain. Instead of using tools specific to your domain, you can use modern Python data science tools that meet standards such as scalability, interactivity, and cloud support.

Note that many Pandata tools are commonly used in the field of data science rather than in any particular scientific or research domain. You may not think you are doing data science, but data science tools are simply ways of working with data that are applicable across many disciplines! Data science isn’t just for artificial intelligence (AI), machine learning (ML), and statistics, although it supports these areas well, too.

There is no management, policy, or software development associated specifically with Pandata; it is simply an informational website on GitHub set up by the authors of some of the Pandata tools. Check out the website, consider using the tools, and if they meet your needs, use them with the confidence that they will generally work well together to solve problems. Feel free to open an issue on the Pandata site if you have any questions or ideas.

There are lots of examples online of applying Pandata libraries to solve problems.

You can download and use any Pandata package in any combination and enjoy having all this power at your fingertips. See the Pandata paper from SciPy 2023 for more information.